What will our factories look like in 2035? You do not have to be a great visionary to picture a state-of-the-art production facility crammed with robots and artificial intelligence (AI). A ‘rise of the machines’ nightmare scenario? Not necessarily, because people will also play an important role in tomorrow’s factories. In fact, if we manage to combine the strengths of man and machine optimally, we may lay the foundation for a dream marriage that, by 2035, could mark the start of the fifth industrial revolution: smart factories in which the focus is not on automation, digitalization and mass production, but on customization and personalization, steered by human creativity.

Industry 5.0: where smart robotics meets human creativity

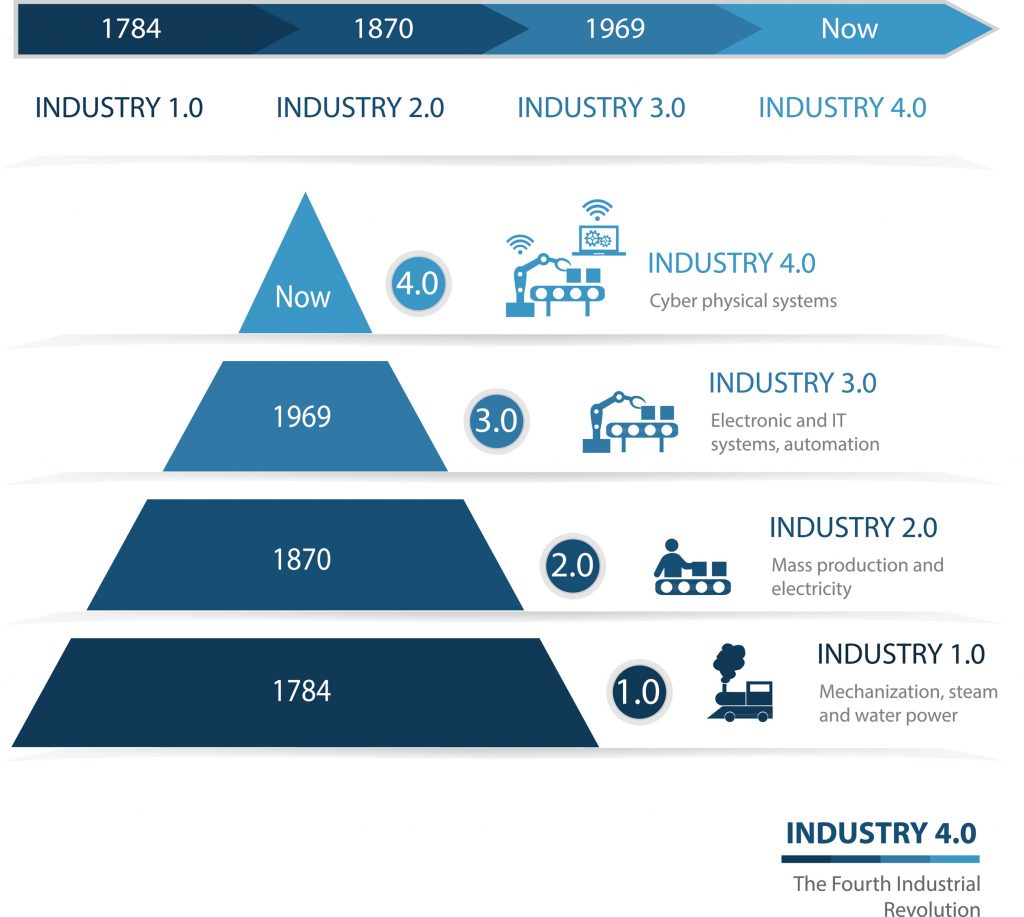

Industry has evolved at breakneck speed over the last 300 years. It all began in the 18th century, when rural societies in Europe and America began to urbanize and the iron and textile industries started to blossom, in part thanks to the invention of the steam engine. That marked the start of Industry 1.0.

In the decades before the First World War, new industries (such as steel and oil) emerged, while electrification allowed us to start mass-producing goods. That marked the start of Industry 2.0.

Since then, the pace of development has become ever faster. In the 1970s we witnessed the start of Industry 3.0 – featuring digital technology, the automation of industrial processes and the introduction of robots.

And today we are at the dawn of Industry 4.0, which largely builds on the Internet of Things (IoT) revolution: devices of all sorts, including robots, are connected to the Internet and produce a continuous stream of data that can yield deeper insight into industrial processes and help optimize them further.

Fig 1: From steam engine to the Internet of Things: industry has evolved at breakneck speed in the last 300 years.

Impressive, right? Still, we have to add a note of caution. As automation and optimization have become more important over the years, the role of humans in production has been increasingly threatened…

“Yet it is precisely this threat that Industry 5.0 will end. In a world in which every individual wants to fully express themselves, there will be increasing demand for unique, customized and personalized products. In such an era, the holy grail will no longer be robot-controlled mass production, but human creativity.”

In the smart factories of 2035, then, a new collaboration model will need to be put in place: a marriage, you could say, between man and machine, with robots doing the heavy mechanical labor and their human co-workers acting as ‘creative architects’ who invent new, custom-made products and oversee their production.

The question is: how can you foster a partnership between man and machine in such a setting? How do you forge a pairing in which 1 + 1 really does make 3? It will all boil down to effective communication between the different parties!

Digital twins for our smart factories?

To give Industry 5.0 every chance of success, it will be crucial to advance communication between the different actors (humans and machines).

Of course, machines already communicate with each other today. In large car factories, for instance, integrators use standardized protocols to ensure that machines (sometimes from different vendors) ‘know’ enough about each other to meet production targets. But let us be honest: in today’s factories, every machine basically does its own bit of (assembly line) work, and little real communication is needed.

In the future, as machines become more autonomous and need to anticipate each other’s actions, communication will become much more difficult.

“Imagine, for example, two robots approaching each other on the factory floor. How can one robot anticipate how the other is going to move (‘Will it go left or right? And what should I do…?’)? And that is before taking into account the positions, actions and reactions of other robots nearby…”

To manage this type of situation, you could make a digital copy (or twin) of the factory in the cloud. In effect, you create a digital model of the physical factory floor: a model that continuously updates itself based on real-time sensor data, and in which all decisions (and their outcomes) are simulated in real time. In this scenario, all authority resides at a central location from which all instructions depart, and the robots and machines on the factory floor simply carry out in the physical world what has been decided in the virtual one.

At first glance, this ‘dictator model’ seems an ideal solution to deal with complex situations on the factory floor while ensuring that production targets are met. Technically, such a scenario is already perfectly feasible: the only things you need are a fast data connection between the physical machines in the production area and the ‘virtual brain’, and a lot of processing power.
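To make the ‘dictator model’ more concrete, the Python sketch below shows one possible shape of its control loop. It is a toy under clearly stated assumptions, not an industrial design: the grid-shaped factory floor, the names `DigitalTwin` and `control_step`, and the rule that robots yield to each other in alphabetical order are all hypothetical. In each cycle, the twin mirrors the latest sensor readings, simulates one step for every robot centrally and tells each robot which cell it may move to.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Tuple

Cell = Tuple[int, int]  # (x, y) position on a simplified, grid-shaped factory floor (assumption)


@dataclass
class DigitalTwin:
    """Hypothetical central 'virtual brain' holding the authoritative state of every robot."""
    positions: dict[str, Cell]
    targets: dict[str, Cell]
    predicted: dict[str, Cell] = field(default_factory=dict)

    def sync(self, readings: dict[str, Cell]) -> list[str]:
        """Mirror real-time sensor data; return the robots that are not where the plan put them."""
        deviations = sorted(
            name for name, cell in readings.items()
            if name in self.predicted and self.predicted[name] != cell
        )
        self.positions.update(readings)
        return deviations

    def plan(self) -> dict[str, Cell]:
        """Simulate one time step centrally and reserve the next cell for every robot."""
        occupied = set(self.positions.values())
        moves: dict[str, Cell] = {}
        for name in sorted(self.positions):  # alphabetical order = fixed priority (assumption)
            (x, y), (tx, ty) = self.positions[name], self.targets[name]
            if x != tx:    # move along x first...
                nxt = (x + (1 if tx > x else -1), y)
            elif y != ty:  # ...then along y
                nxt = (x, y + (1 if ty > y else -1))
            else:
                nxt = (x, y)  # already at its target
            if nxt != (x, y) and nxt in occupied:
                nxt = (x, y)  # conflict: the central brain tells this robot to wait
            occupied.discard((x, y))
            occupied.add(nxt)
            moves[name] = nxt
        self.predicted = dict(moves)
        return moves


def control_step(twin: DigitalTwin, readings: dict[str, Cell]) -> Optional[dict[str, Cell]]:
    """One cycle of the dictator model: sync with the sensors, then plan; halt on surprises."""
    if twin.sync(readings):
        return None  # reality no longer matches the virtual model
    return twin.plan()


twin = DigitalTwin(positions={"A": (0, 0), "B": (1, 1)},
                   targets={"A": (2, 0), "B": (1, -1)})
print(control_step(twin, {"A": (0, 0), "B": (1, 1)}))  # {'A': (1, 0), 'B': (1, 1)}: B waits
print(control_step(twin, {"A": (1, 0), "B": (1, 1)}))  # {'A': (2, 0), 'B': (1, 0)}
print(control_step(twin, {"A": (2, 0), "B": (0, 0)}))  # None: B was moved by hand
```

In the first cycle, robots A and B both want cell (1, 0); the central brain gives it to A and tells B to wait. Returning `None` as soon as the readings disagree with the twin’s prediction is a deliberate design choice: a centrally planned model has no way to account for an action it did not plan itself, such as a person moving a robot by hand.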

There are, however, two caveats. The first is purely economic. Let us not forget that industrial settings are often complex and competitive places where many actors collaborate (suppliers and partners, and sometimes also competitors). In such a context, protecting data and confidential information is enormously important. That does not fit the ‘dictator model’, in which the central brain must have access to all possible types of data (including competitively sensitive data) to do its job properly. For many business leaders, having to share those data would be the ultimate nightmare.

Fig 2: Should we make digital twins of the factory in the cloud to achieve reliable communication between humans and machines? Although this ‘dictator model’ seems an ideal solution to deal with complex situations on the factory floor, there are two caveats: competitors working in the same factory don’t want to share data, and a human employee needs to be able to intervene.

And the second caveat? Human unpredictability! Even in a factory where the commercial interests of only one party are involved, the centrally controlled scenario falls to pieces as soon as a single person walks around the factory floor: a person with their own autonomy and authority. Imagine, for example, that the human employee (the ‘creative architect’, as we labeled them earlier) notices that a robot is doing something wrong and steps in to correct it… At that moment, the whole system would come to a standstill: the physical factory would no longer match its virtual model, and the virtual brain would have lost control.

Hence, this model might only be valid for industrial facilities that focus on the production of bulk goods, and where the role of humans is minimal (or, in the long run, perhaps even non-existent).

A new form of artificial intelligence: complex reasoning

In other words, wherever man and machine do have to work together, we will need different methods that cater for human unpredictability and enable robots to anticipate it.

“A particularly promising principle is that of ‘complex reasoning’ – a new form of artificial intelligence that can be used to teach machines how to reason autonomously and anticipate the actions of something (or someone) else. However, there is still a long way to go before we can put the principle of complex reasoning into practice.”

After all, artificial intelligence as we know it today is largely based on ‘deep learning’: a powerful technology for recognizing patterns in huge amounts of data. By now, we have largely mastered this technology, so the next goal is to have machines ask themselves: “How do my actions affect the actions of the people around me?”

To make things even more complicated, there is an extra consideration: in an industrial setting, the foremost requirement is transparency (to make sure production targets can be met). Deep learning, however, is the opposite of transparent: it is a ‘black box’. You train the system to recognize patterns, but you lose insight into how it reaches its conclusions.

Hence, complex reasoning comes with an extra requirement: it must be sufficiently transparent (or ‘explainable’) for people to accept it. In the future, we will therefore be talking about ‘explainable AI’.

Lifelong learning: also for robots

In the run-up to 2035, complex reasoning will become a new strategic research topic, with teams from across the globe studying how the underlying algorithms should be developed, implemented and optimized.

Furthermore, we will have to work out how machines can continually improve their reactions and their ways of anticipating others’ actions. This calls for new ‘reward systems’ based on implicit and explicit feedback signals.
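The text does not specify what such reward systems will look like. Purely as an illustration, the Python sketch below assumes one simple option borrowed from reinforcement learning: an explicit signal (an operator’s rating) and an implicit signal (the operator had to step in) are folded into a single reward, which then nudges the robot’s estimate of how well each behavior works. The class `FeedbackLearner`, its weights and the ‘handover’ behaviors are hypothetical.

```python
from __future__ import annotations


class FeedbackLearner:
    """Hypothetical robot policy that keeps refining its behavior from human feedback."""

    def __init__(self, actions: list[str], alpha: float = 0.2,
                 w_explicit: float = 1.0, w_implicit: float = 0.5) -> None:
        self.actions = list(actions)
        self.alpha = alpha            # constant step size: recent feedback keeps counting
        self.w_explicit = w_explicit  # weight of explicit signals (an operator's rating)
        self.w_implicit = w_implicit  # penalty for implicit signals (the operator stepped in)
        self.value = {a: 0.0 for a in self.actions}  # estimated reward per behavior
        self.tried: set[str] = set()

    def reward(self, rating: float | None = None, intervened: bool = False) -> float:
        """Fold an optional explicit rating in [-1, 1] and an implicit signal into one reward."""
        r = 0.0
        if rating is not None:
            r += self.w_explicit * rating
        if intervened:
            r -= self.w_implicit
        return r

    def choose(self) -> str:
        """Try each behavior once, then pick the one with the highest estimated reward."""
        for a in self.actions:
            if a not in self.tried:
                return a
        return max(self.actions, key=lambda a: self.value[a])

    def update(self, action: str, rating: float | None = None,
               intervened: bool = False) -> float:
        """Move the behavior's estimate a fraction alpha toward the latest reward."""
        r = self.reward(rating, intervened)
        self.tried.add(action)
        self.value[action] += self.alpha * (r - self.value[action])
        return r


robot = FeedbackLearner(["fast handover", "slow handover"], alpha=0.5)
first = robot.choose()                # 'fast handover' (not tried yet)
robot.update(first, intervened=True)  # implicit feedback: reward -0.5
second = robot.choose()               # 'slow handover'
robot.update(second, rating=1.0)      # explicit feedback: reward 1.0
print(robot.choose())                 # slow handover
```

The constant step size `alpha` (rather than a running average) is a deliberate choice: old feedback gradually fades, so the robot keeps adapting when, say, a new operator prefers a different behavior, which is closer in spirit to lifelong learning. A real system would also need to keep exploring instead of choosing greedily after a single trial per behavior.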

“You can bet that, in future, the concept of ‘lifelong learning’ will no longer only apply to man, but also to machines…”

How is imec contributing to this future?

Imec holds a world-leading position in several of the technology domains that drive the creation of smart industries, from intelligent logistics and the Internet of Things to human-machine interaction, big data analytics, sensor systems for industrial applications and imaging technology.

Questions that our researchers are trying to answer include:

- How can intelligent algorithms help companies reduce operational costs (such as production time and energy consumption) and solve complex logistical puzzles?

- How can we extend the advantages of holographic 3D technology or smart vision systems to domains such as smart entertainment and smart manufacturing?

- How can we optimally – and safely – accommodate human-machine interaction in production environments?

- How can we combine sensors, actuators and electronics in small, ultra-low-power chips that continuously acquire data on production processes, storage and stock management?

- How can low-cost identification, tracking and sensing chips be integrated into plastic foil thinner than paper?

- How can we turn the massive amounts of unstructured data that are generated by sensor networks into usable knowledge that makes companies more efficient?

Want to know more?

Today, the 3D reconstruction of, for example, tunnels or industrial buildings is a time-consuming and expensive process. Read more about LiBorg 2.0 – a robot for on-the-fly 3D mapping of environments, based on lidar technology.

In this article, you will learn how to inspect the inside of complex quality products without damaging them.

Learn more about Antwerp start-up Aloxy, a spin-off from imec and the University of Antwerp, which delivers plug-and-play IoT solutions for digitizing manual valves in the petrochemical industry and for asset management during maintenance and shutdowns.

In ‘The Internet of Unexpected Things’ a selection of IoT projects is presented in which imec collaborates closely with industrial partners.

How can we plug robots into the IoT? Find out more in this article.

Biography Pieter Simoens

Pieter Simoens (1982) is a professor at Ghent University, affiliated with imec. He specializes in distributed artificial intelligence systems. His research focuses, among other things, on the link between robots and the Internet of Things, on continuously learning embedded devices, and on how collective intelligence can arise from the collaboration of individual, autonomous agents.