Launch of the Intel® Xeon Phi™ Processor and Advancements to the Intel® Scalable System Framework Address Market Demands for Improved Performance, Energy Efficiency and Programmability

June 20, 2016 — As data volumes continue to explode and become more complex, new hardware, software and architectures are needed to drive deeper insight and accelerate new discoveries, business innovation and the next evolution of analytics in machine learning and the field of artificial intelligence.

A key to unlocking these deeper insights is the new Intel® Xeon Phi™ processor. As a foundational element of Intel® Scalable System Framework (Intel® SSF), the Intel® Xeon Phi™ product family is part of a complete solution that brings together key technologies for easy-to-deploy and high-performance clusters.

Solving the Biggest Challenges Faster with the Intel® Xeon Phi™ Processor Family¹

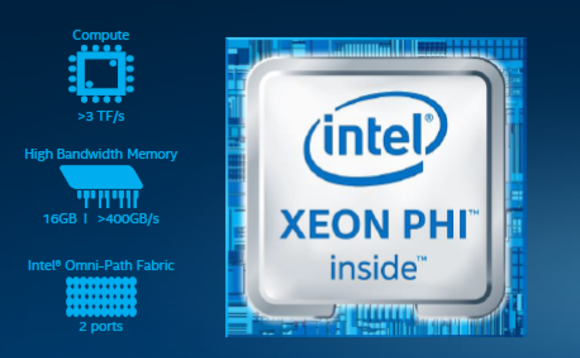

The new Intel Xeon Phi processor is Intel’s first bootable host processor specifically designed for highly parallel workloads, and the first to integrate both memory and fabric technologies. As a bootable x86 CPU, the Intel Xeon Phi processor scales efficiently without the dependency on the PCIe bus that constrains GPU accelerators. By eliminating this dependency, the Intel Xeon Phi processor offers greater scalability and can handle a wider variety of workloads and configurations than accelerator products.

The integration of 16 GB of high-bandwidth memory delivers up to 500 GB/s of sustained memory bandwidth for memory-bound workloads², and the available dual-port Intel® Omni-Path Architecture (Intel® OPA) further reduces solution cost, power and space utilization. The Intel Xeon Phi processor is a general-purpose CPU built on open standards, making software investments portable into the future.
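For a rough sense of scale, the capacity and bandwidth figures above can be combined in a back-of-the-envelope calculation. The sketch below is illustrative only: it assumes decimal units (1 GB = 10^9 bytes) and the quoted "up to" bandwidth figure, and the function name `min_sweep_time_s` is hypothetical rather than part of any Intel tool.

```python
# Back-of-the-envelope estimate, not a benchmark.
# Assumption: decimal gigabytes and the peak "up to" bandwidth figure.

def min_sweep_time_s(capacity_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on the time to read a buffer of the given size once."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return capacity_gb / bandwidth_gb_per_s


HBM_CAPACITY_GB = 16.0       # integrated high-bandwidth memory
PEAK_BANDWIDTH_GB_S = 500.0  # quoted "up to" sustained bandwidth

sweep_ms = min_sweep_time_s(HBM_CAPACITY_GB, PEAK_BANDWIDTH_GB_S) * 1000
print(f"One pass over {HBM_CAPACITY_GB:.0f} GB takes at least {sweep_ms:.0f} ms")
```

Real workloads will typically take longer than this lower bound, since achieved bandwidth depends on the access pattern and configuration.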

Across a wide range of applications and environments, from machine learning to high-performance computing (HPC), the Intel Xeon Phi product family helps solve the biggest computational challenges faster and with greater efficiency and scale³. The product family also helps to drive new breakthroughs using high-performance modeling and simulation, visualization and data analytics.

Additional Intel Xeon Phi features and benefits include:

Performance: Features up to 72 powerful and efficient cores with ultra-wide vector capabilities (Intel® Advanced Vector Extensions 512, or Intel® AVX-512), raising the bar for highly parallel computing performance.

Scalability: Delivers data center-class CPU scalability and reliability for running high-performance workloads such as machine learning, where scaling efficiency is critical for rapid training of complex neural networks.

Programmability: Offers binary compatibility with Intel® Xeon® processors, allowing it to run any x86 workload. This optimizes asset utilization across the data center, while the use of a common programming model increases productivity through a shared developer base and code reuse.

Investment Protection: Built on general-purpose x86 CPU architecture and open standards, with the support of a broad ecosystem of partners, programming languages and available tools, for superior flexibility, software portability and reusability.
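To illustrate what "ultra-wide" means in the Performance item above, the following sketch counts how many elements fit in one 512-bit vector register and how many vector-wide steps a loop of a given length would need. It is a simplified illustration only: the helper names are hypothetical, and lane counts alone say nothing about achieved throughput on any particular processor.

```python
# Illustrative arithmetic only; helper names are hypothetical.
VECTOR_WIDTH_BITS = 512  # width of one AVX-512 vector register


def lanes(element_bits: int) -> int:
    """Number of elements of the given width that fit in one register."""
    if element_bits <= 0 or VECTOR_WIDTH_BITS % element_bits != 0:
        raise ValueError("element width must evenly divide the vector width")
    return VECTOR_WIDTH_BITS // element_bits


def vector_iterations(n_elements: int, element_bits: int) -> int:
    """Vector-wide steps needed to cover n_elements (ceiling division)."""
    return -(-n_elements // lanes(element_bits))


print(lanes(64))                    # double-precision values per register
print(lanes(32))                    # single-precision values per register
print(vector_iterations(1000, 64))  # steps to cover 1,000 doubles
```

A loop over 1,000 double-precision values therefore needs far fewer vector steps than scalar steps, which is the basic reason wide vectors benefit highly parallel, data-intensive code.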

Systems featuring the Intel Xeon Phi processor family are publicly available today with a broader selection expected to be available in September of this year. To date, Intel has shipped tens of thousands of units and expects to sell a total of more than 100,000 units this year. The product family’s broad ecosystem support includes more than 50 OEM, ISV and middleware partners. To learn more, visit www.intel.com/xeonphi/partners.

Machine Learning Goes Deeper with Intel® Xeon Phi™ Processors

Machine learning implementations require an enormous amount of compute power to run mathematical algorithms and process huge amounts of data. With these challenges in mind, Intel has expanded its range of technologies for machine learning with the release of the Intel® Xeon Phi™ processor family. The Intel® Xeon Phi™ processor offers robust performance for training machine learning models, and with the flexibility of a bootable host processor, it is capable of running multiple analytics workloads. Intel® Scalable System Framework-based clusters powered by Intel Xeon Phi processors with the available integrated Intel® Omni-Path Architecture enable data scientists to run complex neural networks and train models in significantly less time. In a 32-node infrastructure, the Intel Xeon Phi family offers up to 1.38 times better scaling than GPUs, and in a 128-node infrastructure, models can be trained up to 50 times faster using the Intel Xeon Phi family³.

The Intel Xeon Phi family is complemented by the Intel® Xeon® processor E5 family, the most widely deployed infrastructure for machine learning⁴. The Intel Xeon processor E5 v4 product family is well suited for machine learning scoring models and provides great performance and value for a wide variety of data center workloads. Together, these Intel Xeon processor families offer developers a consistent programming model for training and scoring and a common architecture that can be used for high-performance computing, data analytics and machine learning workloads.

New Intel® Scalable System Framework Reference Architecture

Designed for everything from small clusters to the world’s largest supercomputers, Intel® SSF provides scalability and balanced performance for both compute- and data-intensive applications, machine learning and visualization. Intel has released its first Intel® SSF reference architecture, providing a recommended baseline hardware and software configuration for an optimized HPC system. The Intel SSF reference architecture is supported by two reference designs that document specific HPC system requirements, including hardware and software elements and installation and configuration instructions. The new reference architecture and designs help system builders simplify the design and validation processes, and offer end users purchase guidance so they can more fully realize the value of Intel® SSF while preserving broad software application portability. More information on the new reference architecture and designs is available at: www.intel.com/SSF.

Simplifying Software Deployments with Intel® HPC Orchestrator

Intel® HPC Orchestrator is a new family of supported products that will simplify the implementation and ongoing maintenance of an HPC system software stack by reducing the amount of integration, testing and validation work required. The Intel HPC Orchestrator products, which are targeted for availability in the fourth quarter, are based on OpenHPC community software and provide professional services and technical support. The first product, Intel® HPC Orchestrator–Advanced, is a modular software stack that is built to offer customization, performance and scalability along with ease of use. More information on Intel® HPC Orchestrator features and benefits is available at: www.intel.com/hpcorchestrator.

Rapid Industry Adoption of Intel Omni-Path Architecture

Intel Omni-Path Architecture (Intel® OPA) is a new end-to-end fabric solution designed to cost-effectively improve the performance of HPC applications for entry-level to large-scale HPC clusters. Market adoption continues to rapidly grow with more than 80,000 nodes in the market and broad availability from system manufacturers shipping Intel OPA-based switches and server platforms, including: Dell*, Fujitsu*, Hitachi*, HP*, Inspur*, Lenovo*, NEC*, Oracle*, Quanta*, SGI*, Supermicro*, Colfax* and many others.

Major Intel OPA customer deployments include:

Additional materials and multimedia are available at: https://www.intel.com/newsroom/isc.

Intel, the Intel logo, Xeon Phi and Omni-Path are trademarks of Intel Corporation in the United States and other countries.

*Other names and brands may be claimed as the property of others.