Most of us wonder how AI effortlessly generates text and answers complex queries. Large language models (LLMs) such as ChatGPT can translate languages, summarise articles, or even write poetry- all by simply following prompts. Yet, when we ask these intelligent models to recognize a specific object,say, your beloved long-haired cat named Snoofkin-they stumble surprisingly.

Why is recognizing your unique cat harder than writing an essay?

Language Models: Masters of Context

To understand why AI struggles with personalized image recognition, let’s first explore how language models learn. Models like GPT-4 excel at what’s called “in-context learning” (ICL). Give the model an example such as “Translate chien → dog,” and it can then correctly translate new words based on that example alone. This works smoothly because the models are trained extensively on text containing millions of examples of context pairs-think flashcards embedded within vast libraries of text.

Vision Models: A Different Story

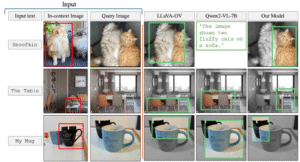

But add vision into the mix, and things change drastically. Modern Vision–Language Models (VLMs) such as GPT-4o or Qwen2-VL are great at general tasks, like captioning images or recognizing common objects. Yet, when tasked with something personalized, identifying Snoofkin in a new photo after seeing only a few examples-these sophisticated models become surprisingly confused. They tend to point at any cat-shaped blob rather than specifically Snoofkin.

Why the confusion? Unlike text, image training data is typically single-shot: one caption, one image. Rarely does training data show multiple images of the same specific entity across contexts. Hence, these models never learn the concept of recognizing the same specific instance across various images.

Bridging the Gap: IPLoc (In-context Personalised Localisation)

Enter IPLoc, a novel method from our recent research at the Weizmann Institute (in collaboration with TAU, MIT-CSAIL and more), designed to enhance VLMs’ ability to handle personalized visual tasks. IPLoc cleverly uses existing video-tracking datasets, which naturally contain multiple images of the same object, such as a cat, across different frames. We repurpose these sequences into conversational prompts-just like language models use text pairs-to teach models to track specific objects visually.

Here’s how it works:

- Video datasets: We leverage video tracking datasets, containing sequences where objects like pets or vehicles appear in consecutive frames.

- Dialogue formation: Each tracked object becomes a conversation: “Here’s Snoofkin” (with a bounding box), followed by, “Where is Snoofkin now?” for subsequent frames.

- Masked labels: To avoid models cheating by relying on familiar labels, we use pseudo-names (like changing “Airplane” to “Elizabeth”) forcing them to focus solely on visual details.

By using minimal additional training (just a 1% parameter adjustment via LoRA), models dramatically improve their ability to personalize visual recognition.

How Effective is IPLoc?

Our experiments show IPLoc significantly boosts model accuracy. On three personalization benchmarks, IPLoc-enhanced models showed 14–18 percentage point gains over unmodified models. Interestingly, as we provide more examples (up to eight), IPLoc models continue improving, a hallmark previously only observed in purely textual models.

Why Personalized Recognition Matters

One might wonder why personalized image recognition matters when tech giants push towards all-purpose VLMs. Models like GPT-4o are undeniably powerful but still struggle to differentiate between near-identical instances, such as your mug versus a similar stock image.

IPLoc suggests the future lies in hybrid solutions: large, general-purpose models supplemented with small adapters fine-tuned for personalized tasks. This combination offers high accuracy while maintaining privacy (as minimal adjustments can even run directly on user devices).

Real-World Applications

Imagine seamlessly managing your photo collections, quickly sorting images of your pets or family members without tedious manual labeling. In industrial settings, visual AI could efficiently track specific components on manufacturing lines, reducing the need to retrain entire detection systems when designs change. Similarly, augmented reality experiences could become deeply personalized, allowing precise annotation of your own tools or objects in a virtual workspace. Wildlife researchers could also greatly benefit, effortlessly tracking individual animals across thousands of images from trail cameras, significantly advancing conservation efforts.

While specialized few-shot detection models can address these tasks, there remains a critical need for a single, comprehensive model—capable of generating text and handling general tasks as GPT-4o already does—that can also perform precise object localization.

Conclusion

IPLoc demonstrates that the challenge in teaching AI to recognize your cat-or any personalized item-is less about model size and more about targeted training data. By carefully adapting visual contexts in training, we empower models to recognize individual objects reliably, bringing personalized AI closer to reality. As a result, Snoofkin can now confidently stand apart from any random cat meme, highlighting the potential for highly personalized AI interactions in everyday life.

About the author:

Sivan Doveh is completing her PhD at the Weizmann Institute of Science, is a research student at Google, and will join Stanford University as a post-doctoral researcher in autumn 2025.