The Internet of Everything (IoE), as it is now being called, embraces both traditional data services and the burgeoning requirements of the Internet of Things (IoT), and it is adding considerably to the pressure on data communication networks and on the storage capabilities of data centers. As rising demand drives inevitable growth in this infrastructure, the energy efficiency of Cloud services is deservedly coming under increased scrutiny. Any measure that realizes valuable energy savings, including more efficient power delivery to the many servers in these data centers, will not only help operators keep costs down but will also benefit the environment. Collaboration between leading companies in the power space, each bringing its own experience to bear, is one initiative that is providing a way forward.

Introduction: An Imminent Explosion of Data

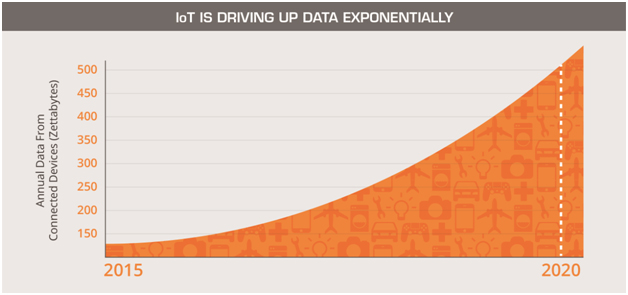

Retail operations already generate significant volumes of data: according to Cisco, a large store may collect 10GB of data every hour and transmit 1GB of that to a data center. Industrial operations can generate vastly more. For example, automated manufacturing plants can generate 1TB per hour, while a large mining operation can easily exceed 100TB per hour. With the addition of connected “Things”, such as sensors and controls enabling infrastructure management and security applications in commercial and residential properties, a veritable explosion of data is imminent.

Capturing all this data continuously is the easy part. Transforming it into useful information is what really counts, and this is where Cloud services are key. Data centers are at the heart of Cloud services, and while their processing and storage capabilities continue to benefit from improvements in technology, it is their energy consumption that has increasingly become a matter of public concern. Over the typical three-year life of a server, the cost of powering it can exceed its purchase price. On top of this is the energy cost of running the cooling systems needed to maintain safe operating temperatures for all the electronic equipment. This is why there is now a major industry trend toward siting new data centers in cooler climates, even undersea, and close to plentiful sources of renewable energy such as hydroelectric power plants.

Figure 1. Connected devices are expected to produce 500 zettabytes of data annually by 2020.

Alleviating the Energy Consumption Concern

While looking at potentially establishing higher maximum equipment operating temperatures to save on cooling costs, operators also recognize the importance of improving the overall energy efficiency of data center equipment. Doing so reduces not only the energy consumed directly but also the heat generated, and hence the cost of cooling.

Maximizing efficiency at every point is vital: in the servers, in their power supplies and in the system-management software. Even so, peak power consumption continues to rise to meet the demand for more computing capability. The consumption of a typical server board has increased from a few hundred watts to 2kW or 3kW today, and could reach 5kW or more in the future. As a result, there is a growing difference between a server’s minimum power at light load and its power at full load. Fortunately, power distribution architectures are becoming more flexible, with real-time adaptive capabilities that maintain optimal efficiency under all operating conditions.

Adapting the Power Architecture

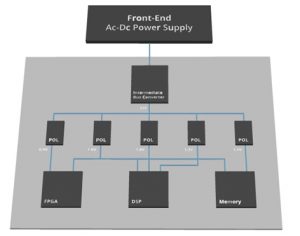

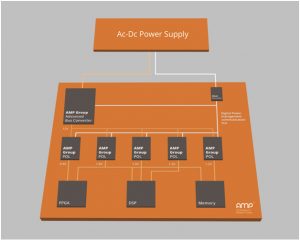

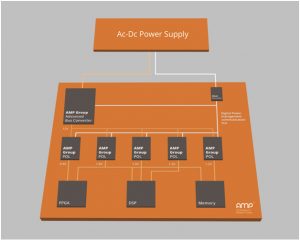

A distributed power architecture typically comprises an Intermediate Bus Converter (IBC) that operates from a 48Vdc input supplied by a front-end AC/DC converter, as shown in figure 2. The IBC provides a 12V intermediate bus that supplies low-voltage DC-DC point-of-load (POL) converters positioned close to the major power-consuming components on the board, such as processors, systems-on-chip (SoCs) or FPGAs. Multiple POLs may be used to supply core, I/O and any other voltage rails. The IBC’s 48Vdc input and 12V output voltages were historically chosen to minimize both down-conversion losses and the distribution losses that grow with current and distance when supplying typical server boards. However, given the changes in core voltage, current draw, maximum power and the difference between full-load and no-load power, these fixed voltages are less suited to maintaining optimal efficiency in modern systems. The ability to set different voltages, and to change them dynamically in real time, is needed to allow a system to adapt continuously for best efficiency.

Figure 2. The traditional fixed distributed power architecture suited earlier generations of servers

Adaptive Control Requires a Common Protocol

PMBus is an industry-standard protocol for communicating with digitally controllable power supplies from the front-end, through the advanced bus and to the point-of-load converters (figure 3). By monitoring the status of these converters, a host controller can optimize input and output voltages and send commands to manage other aspects of device operation, such as enable/disable, voltage margining, fault management, sequencing, ramp-up, and tracking.
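To give a feel for what a host controller actually sends, the sketch below builds the bytes for an output-voltage setpoint using the LINEAR16 data format that many PMBus converters use for VOUT_COMMAND. The command codes are the standard PMBus ones, but the VOUT_MODE value of 0x14 (an exponent of -12) and the 12 V setpoint are assumptions chosen for illustration. A real device reports its own exponent, and some converters use a different format altogether. No bus transaction is performed here; the function names are hypothetical.

```python
VOUT_MODE = 0x20     # PMBus command code: reports the output-voltage data format
VOUT_COMMAND = 0x21  # PMBus command code: sets the output-voltage setpoint


def linear16_exponent(vout_mode: int) -> int:
    """Extract the 5-bit two's-complement exponent from a VOUT_MODE byte."""
    exp = vout_mode & 0x1F
    return exp - 32 if exp & 0x10 else exp


def encode_linear16(volts: float, exponent: int) -> int:
    """Return the unsigned 16-bit mantissa so that volts ~= mantissa * 2**exponent."""
    mantissa = round(volts / 2.0 ** exponent)
    if not 0 <= mantissa <= 0xFFFF:
        raise ValueError("voltage not representable with this exponent")
    return mantissa


def decode_linear16(mantissa: int, exponent: int) -> float:
    """Convert a LINEAR16 mantissa back to volts."""
    return mantissa * 2.0 ** exponent


def vout_command_frame(volts: float, vout_mode: int) -> bytes:
    """Command code followed by the data word, low byte first (write-word order)."""
    word = encode_linear16(volts, linear16_exponent(vout_mode))
    return bytes([VOUT_COMMAND, word & 0xFF, word >> 8])


if __name__ == "__main__":
    # Assumed device: VOUT_MODE reads back 0x14, i.e. exponent -12.
    print(vout_command_frame(12.0, 0x14).hex())  # 2100c0
```

In a real system these bytes would follow the converter's bus address in a write transaction, and the host would read VOUT_MODE from the device first rather than assume its value.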

The controllability enabled by PMBus is allowing system designers to create power architectures that are increasingly software defined and able to respond in real time for optimum efficiency. Some of today’s most powerful techniques for optimizing efficiency include Dynamic Bus Voltage (DBV) optimization, Adaptive Voltage Scaling (AVS), and multicore activation on demand.

Figure 3. Energy efficiency can be optimized on the fly with PMBus-compatible converters

DBV provides a means of adjusting the intermediate bus voltage dynamically to suit prevailing load conditions. At higher levels of server-power demand, PMBus instructions can command a higher output voltage from the IBC in order to reduce the output current and hence minimize distribution losses.
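The effect of bus voltage on distribution loss can be sketched with a simple resistive model: for a fixed load power, bus current falls as voltage rises, and the loss in the distribution path scales with the square of that current. The 2 milliohm path resistance, the 9 V and 12 V setpoints and the 300 W threshold below are all hypothetical values, and the selection rule is only a placeholder for whatever policy a real system controller would apply. The model also ignores the conversion losses in the IBC and POLs that would motivate a lower bus voltage at light load.

```python
def distribution_loss_w(load_w: float, bus_v: float, path_resistance_ohm: float) -> float:
    """I^2 * R loss in the bus distribution path for a given load power."""
    current_a = load_w / bus_v
    return current_a ** 2 * path_resistance_ohm


def choose_bus_voltage(load_w: float, threshold_w: float = 300.0,
                       low_v: float = 9.0, high_v: float = 12.0) -> float:
    """Hypothetical two-level policy: raise the bus voltage once load passes a threshold."""
    return high_v if load_w >= threshold_w else low_v


if __name__ == "__main__":
    R = 0.002  # assumed distribution-path resistance in ohms
    for load in (100.0, 600.0):
        v = choose_bus_voltage(load)
        print(f"{load:.0f} W load -> {v:.0f} V bus, "
              f"{distribution_loss_w(load, v, R):.2f} W distribution loss")
```

Under these assumptions a 600 W load loses 5 W in the path at 12 V but almost 9 W at 9 V, which is why the higher setpoint is preferred when demand is high.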

AVS is a technique used by leading high-performance microprocessors to optimize supply voltage and clock frequency to ensure processing demands are always satisfied with the lowest possible power consumption. This also provides automatic compensation for the effects of silicon process variations and changes in operating temperature. To support AVS, the PMBus specification has recently been revised to define the AVSBus, which allows a POL converter to respond to AVS requests from an attached processor.
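Why a small cut in supply voltage is so valuable can be seen from the usual first-order approximation for CMOS dynamic power, which is proportional to effective switched capacitance times voltage squared times clock frequency. That approximation is a standard textbook model rather than something taken from the AVS or PMBus specifications, and the scaling factors in the example are illustrative only. Leakage power is ignored.

```python
def dynamic_power_w(c_eff_farads: float, volts: float, freq_hz: float) -> float:
    """First-order dynamic power estimate: P ~= C_eff * V^2 * f."""
    return c_eff_farads * volts ** 2 * freq_hz


def relative_power(v_scale: float, f_scale: float) -> float:
    """Dynamic power relative to nominal after scaling voltage and frequency."""
    return v_scale ** 2 * f_scale


if __name__ == "__main__":
    # Illustrative case: 10% lower voltage together with 20% lower clock frequency.
    print(f"{relative_power(0.9, 0.8):.3f} of nominal dynamic power")
```

Because voltage enters as a square, trimming the supply by 10% alone removes roughly 19% of the dynamic power in this model, before any reduction in clock frequency is counted.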

Multicore activation on demand provides a means of activating or powering down the individual cores of a multicore processor in response to load changes. Clearly, de-activating unused cores at times of low processing load can help to gain valuable energy savings.
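A very simple form of this policy can be sketched as follows, assuming that processing demand and per-core capacity are expressed in the same arbitrary units. The function name, the units and the rule of always keeping at least one core active are hypothetical; a real processor or operating system would apply its own, more sophisticated governor.

```python
import math


def cores_needed(demand: float, per_core_capacity: float,
                 total_cores: int, min_cores: int = 1) -> int:
    """Smallest number of active cores that covers the demand, within limits."""
    if per_core_capacity <= 0:
        raise ValueError("per_core_capacity must be positive")
    wanted = math.ceil(demand / per_core_capacity)
    return max(min_cores, min(total_cores, wanted))


if __name__ == "__main__":
    for demand in (0, 250, 2000):
        print(demand, "->", cores_needed(demand, per_core_capacity=100, total_cores=8))
```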

Continuously optimizing the power-conversion architecture and bus voltages will yield improvements in each converter. In a power supply comprising an IBC operating at 93% efficiency and a POL operating at 88%, an improvement of just one percentage point in each stage reduces the power dissipated from about 18.2% of the input power to 16.3%. This not only represents a 10% reduction in power losses, but also relieves the load on the data-center cooling system, thereby delivering extra energy savings.
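The arithmetic behind these figures is a product of stage efficiencies: the overall efficiency of cascaded converters is the IBC efficiency multiplied by the POL efficiency, and the dissipated fraction is one minus that product. The short sketch below reproduces the calculation using the efficiencies quoted above; the function name is only illustrative.

```python
def loss_fraction(*stage_efficiencies: float) -> float:
    """Fraction of input power dissipated by a chain of cascaded converters."""
    overall = 1.0
    for eta in stage_efficiencies:
        overall *= eta
    return 1.0 - overall


if __name__ == "__main__":
    before = loss_fraction(0.93, 0.88)      # IBC at 93%, POL at 88%
    after = loss_fraction(0.94, 0.89)       # one percentage point better per stage
    reduction = (before - after) / before   # relative cut in dissipated power
    print(f"loss before: {before:.4f}, after: {after:.4f}, reduction: {reduction:.1%}")
```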

Software Defined Power Requires Collaboration

While these first adaptive control features mark the beginning of software-defined power architectures, many additional and even more powerful techniques are expected to emerge, assisted by the arrival in the market of digitally controllable, PMBus-compatible IBC and POL supplies from a range of vendors. PMBus is vital in supporting the power supply designs that are needed to meet the IoT challenge. However, one issue still has to be addressed: “plug and play” compatibility between supplies that appear to offer similar specifications but behave differently when sent the same PMBus command.

The formation of the Architects of Modern Power® (AMP) Group in October 2014 has further strengthened the case for digital control through its activities in specifying standards for the interoperability of IBC and POL supplies. This includes standardizing the interpretation of PMBus commands to ensure that all supplies that comply with AMP Group® standards will operate in the same way in response to a given command.

One of the key objectives of the AMP Group in streamlining the design of digital power was to enable true second-source flexibility for system OEMs. In going beyond the standards set by power industry bodies such as POLA and DOSA, the AMP Group has not only created standards that provide mechanical, electrical and software compatibility, but has also ensured that each of its standards is represented by commercially available products from at least two of its founding member companies: CUI, Ericsson Power Modules and Murata. Furthermore, the members have set out to collaborate on a technology and product roadmap to keep one step ahead of the IoE power challenge. The AMP Group demonstrates the adage that “the whole is greater than the sum of its parts”: collaboration between its members, each with its own unique experience, benefits not just those member companies but also the industry at large.

Conclusion

Industry and commerce, driven by the needs of the consumer, are increasingly dependent on Cloud data services as we move rapidly towards the Internet of Everything. The resulting huge quantities of data need to be communicated and processed quickly to provide useful information, and then stored for future reference. As the demands on Cloud data centers increase, energy efficiency is becoming an increasingly important factor governing operating costs. At the board level, energy lost during power conversion can be reduced by adjusting bus voltages as load conditions change. PMBus-compatible converters allow real-time software-based control to achieve a valuable reduction in these losses. At the system level, this combination of optimized hardware and software will greatly improve power utilization in data centers as capacity demands continue to rise. Fully realizing the promise of software-defined power will require collaboration between organizations with unique technologies and competencies, as in the AMP Group, to meet this looming IoE power challenge head-on.

For more information on the AMP Group, visit our site