Introduction

Virtual Reality (VR) is becoming increasingly popular. From its modern origins on desktop, it has quickly spread to other platforms, mobile being the most popular. Every time a new mobile VR demo comes out I am stunned by its quality; each one is a giant leap forward in content quality. As of today, mobile VR is leading the way: because it is based on our everyday phones it is the most accessible form of VR, and because you are not bound to a particular location or wrapped in cables, you can use it wherever and whenever you want.

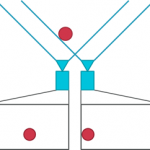

- Fig. 1: Stereo camera setup

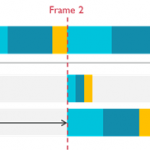

- Fig. 2: Regular Stereo job scheduling timeline

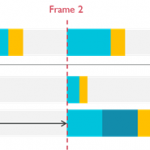

- Fig. 3: Multiview job scheduling timeline.

As we all know, a smooth framerate is critical in VR, where even a slight swing in framerate can cause nausea. The problem we are facing is therefore simple to state, yet hard to address: how can we keep reasonable performance while increasing the visual quality as much as possible?

As everybody in the industry is starting to talk about multiview, let us pause and take a bit of time to understand multiview, what kind of improvements one can expect and why you should definitely consider adding it to your pipeline.

Stereoscopic rendering

What is stereoscopic rendering? The theoretical details behind this question are beyond the scope of this post, but the important point is that we need to trick your brain into thinking that an object is truly three-dimensional rather than flat on a screen. To do this you need to give the viewer two points of view on the object or, in other words, emulate the way eyes work. We therefore create two cameras separated by a slight horizontal offset (Fig. 1), one on the left and one on the right. While they share the same projection matrix, their view matrices differ. That way, we have two different viewpoints on the same scene.
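As a minimal sketch of the two-camera setup, the following C code derives a left and a right view matrix from a single head view matrix. The names `Mat4`, `eye_view_matrix` and `make_stereo_views`, and the idea of parameterising the offset by an eye separation value, are assumptions made for illustration; they do not come from any particular SDK.

```c
/* Column-major 4x4 matrix, the layout OpenGL ES expects. */
typedef struct { float m[16]; } Mat4;

/* Builds the view matrix for one eye from the head's view matrix.
 * eye_offset_x is the eye position along the head's x-axis:
 * negative for the left eye, positive for the right eye.
 * Moving the camera by +x is the same as moving the world by -x,
 * so the result is translate(-eye_offset_x, 0, 0) * head_view. */
static Mat4 eye_view_matrix(const Mat4 *head_view, float eye_offset_x)
{
    Mat4 out = *head_view;
    /* That translation only changes row 0: row0 -= eye_offset_x * row3. */
    for (int col = 0; col < 4; ++col) {
        out.m[col * 4 + 0] -= eye_offset_x * head_view->m[col * 4 + 3];
    }
    return out;
}

/* Index 0 is the left eye, index 1 the right eye. */
typedef struct { Mat4 view[2]; } StereoViews;

/* eye_separation is the distance between the two cameras
 * (hypothetical parameter, in the same unit as the scene). */
static StereoViews make_stereo_views(const Mat4 *head_view, float eye_separation)
{
    StereoViews s;
    s.view[0] = eye_view_matrix(head_view, -0.5f * eye_separation);
    s.view[1] = eye_view_matrix(head_view, +0.5f * eye_separation);
    return s;
}
```

Both eyes keep the same projection matrix; only the translation part of the view matrix differs between them.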

Now, let us have a look at an outline of a regular pipeline for rendering stereo images:

1. Compute and upload left MVP matrix

2. Upload Geometry

3. Emit the left eye draw call

4. Compute and upload right MVP matrix

5. Upload Geometry

6. Emit the right eye draw call

7. Combine the left and right images onto the backbuffer

There is an obvious pattern here: we are emitting two draw calls and sending the same geometry twice. While Vertex Buffer Objects can mitigate the latter, doubling the draw calls is still a major issue, as it adds significant overhead on your CPU. That is where multiview kicks in: it allows you to render the same scene from multiple points of view with a single draw call.

- Fig. 4: Scene used to measure performances

- Fig. 5: Relative CPU time between multiview and regular stereo. The smaller the better, with the number of cubes on the x-axis and the relative time on the y-axis. Multiview in red, and regular stereo in blue

- Fig. 6: Relative GPU time between multiview and regular stereo. The smaller the better, with the number of cubes on the x-axis and the relative time on the y-axis. Multiview in red, and regular stereo in blue

Multiview Double Action Extension

Before going into the details of the expected improvements, I would like to have a quick look at the code needed to get multiview up and running. Multiview currently exists in two major flavors: OVR_multiview and OVR_multiview2. While they share the same underlying construction, OVR_multiview restricts the usage of the gl_ViewID_OVR variable to the computation of gl_Position. This means you can only use the view ID inside the vertex shader's position computation step. If you want to use it inside your fragment shader or in other parts of your shader, you will need to use OVR_multiview2.

As antialiasing is one of the key requirements of VR, multiview also comes in a version with multisampling called OVR_multiview_multisampled_render_to_texture. This extension is built against the specification of OVR_multiview2 and EXT_multisampled_render_to_texture.

Some devices might only support some of the multiview extensions, so remember to always query your OpenGL ES driver before using one of them. You can test whether OVR_multiview is available by looking for its name in the extension string reported by the driver.
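A minimal sketch of such a check is shown below. The helper name `has_extension` is an assumption made for this example; it only does the string matching, so it can be compiled without a GL context. In an application, the extension string would come from `glGetString(GL_EXTENSIONS)`.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Returns true if `name` appears as a whole, space-delimited token in
 * `extensions`. A plain strstr() is not enough here, because
 * "GL_OVR_multiview" is a prefix of "GL_OVR_multiview2". */
static bool has_extension(const char *extensions, const char *name)
{
    if (extensions == NULL || name == NULL || *name == '\0') {
        return false;
    }
    const size_t len = strlen(name);
    const char *p = extensions;
    while ((p = strstr(p, name)) != NULL) {
        const bool starts_token = (p == extensions) || (p[-1] == ' ');
        const bool ends_token = (p[len] == ' ') || (p[len] == '\0');
        if (starts_token && ends_token) {
            return true;
        }
        ++p; /* keep searching after this partial match */
    }
    return false;
}

/* Typical use with a current OpenGL ES context (not compiled here):
 *   const char *ext = (const char *)glGetString(GL_EXTENSIONS);
 *   if (has_extension(ext, "GL_OVR_multiview")) { ... }
 */
```

Matching whole tokens matters on a driver that exposes OVR_multiview2 but not OVR_multiview, where a substring search would report a false positive.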

- Fig. 7: Example of an application using foveated rendering

- Fig. 8: A different reflection for each eye, demonstrated here in Ice Cave VR

In your code, multiview manifests itself on two fronts: during the creation of your framebuffer and inside your shaders. You will be amazed how simple it is to use.

On the framebuffer side, you attach a texture array with one layer per view, using glFramebufferTextureMultiviewOVR, instead of a regular 2D texture. That is more or less all you need to change in your engine code. More or less, because instead of sending a single view matrix uniform to your shader, you need to send an array filled with the different view matrices.
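The matrix array can be prepared as in the following sketch, which packs one column-major matrix per view into a single contiguous buffer. The names `pack_view_matrices`, `NUM_VIEWS` and `MAT4_FLOATS` are assumptions made for this example.

```c
#include <string.h>

enum { NUM_VIEWS = 2, MAT4_FLOATS = 16 };

/* Copies one column-major 4x4 matrix per view into a single contiguous
 * array: view 0 (left) first, then view 1 (right). This is the memory
 * layout of a `uniform mat4 name[NUM_VIEWS]` upload. */
static void pack_view_matrices(const float left[MAT4_FLOATS],
                               const float right[MAT4_FLOATS],
                               float out[NUM_VIEWS * MAT4_FLOATS])
{
    memcpy(out, left, sizeof(float) * MAT4_FLOATS);
    memcpy(out + MAT4_FLOATS, right, sizeof(float) * MAT4_FLOATS);
}

/* With a current OpenGL ES context, the packed array would then be
 * uploaded in one call (not compiled here):
 *   glUniformMatrix4fv(location, NUM_VIEWS, GL_FALSE, packed);
 */
```

Uploading all views in one call keeps the per-frame CPU work the same as for a single view, which is the point of the extension.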

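The shader part is just as small: enable the extension, declare the number of views, and index your matrix array with gl_ViewID_OVR. Simple, isn’t it?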

Multiview will automatically run the shader multiple times, and increment gl_ViewID_OVR to make it correspond to the view currently being processed.
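To make this concrete, here is a minimal sketch of a two-view vertex shader, stored as a C string, followed by a CPU-side emulation of the per-view execution. The attribute and uniform names (`vertexPosition`, `modelViewProjection`) and the helper `emulate_multiview_vertex` are assumptions made for illustration; the emulation only mimics the behaviour described above and is not how a driver is implemented.

```c
/* Vertex shader source for two views, ready to pass to glShaderSource(). */
static const char *const kMultiviewVertexShader =
    "#version 300 es\n"
    "#extension GL_OVR_multiview : enable\n"
    "layout(num_views = 2) in;\n"
    "in vec3 vertexPosition;\n"
    "uniform mat4 modelViewProjection[2];\n"
    "void main()\n"
    "{\n"
    "    // gl_ViewID_OVR is 0 for the first view and 1 for the second.\n"
    "    gl_Position = modelViewProjection[gl_ViewID_OVR]\n"
    "                * vec4(vertexPosition, 1.0);\n"
    "}\n";

/* out = m * v for a column-major 4x4 matrix. */
static void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int row = 0; row < 4; ++row) {
        out[row] = m[0 * 4 + row] * v[0] + m[1 * 4 + row] * v[1]
                 + m[2 * 4 + row] * v[2] + m[3 * 4 + row] * v[3];
    }
}

/* Emulates one multiview vertex invocation on the CPU.
 * mvp holds two packed column-major matrices (32 floats). */
static void emulate_multiview_vertex(const float *mvp,
                                     const float position[3],
                                     float clip_positions[2][4])
{
    const float v[4] = { position[0], position[1], position[2], 1.0f };
    /* view_id plays the role of gl_ViewID_OVR. */
    for (unsigned view_id = 0; view_id < 2; ++view_id) {
        mat4_mul_vec4(mvp + view_id * 16, v, clip_positions[view_id]);
    }
}
```

One vertex goes in and one clip-space position per view comes out, which is what a single multiview draw call produces for each vertex.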

For more in-depth information on how to implement multiview, see the sample code and the article “Using Multiview Rendering”.

Why use Multiview?

Now that you know how to implement multiview, I will try to give you some insights as to what kind of performance improvements you can expect.

The Multiview Timeline

Before diving into the numbers, let’s discuss the theory.

In the regular stereo timeline (Fig. 2), we can see how our CPU-GPU system interacts in order to render a frame using regular stereo. For more in-depth information on how GPU scheduling works on Mali, please see Peter Harris’ blogs.

First the CPU works to get all the information ready, then the vertex jobs are executed and finally the fragment jobs. On this timeline, the jobs related to the left eye are shown in light blue, those related to the right eye in dark blue, and the composition (rendering our two eyes side by side on a buffer) in orange.

In comparison, the multiview timeline (Fig. 3) shows the same frame rendered using multiview. As expected, since our CPU is only sending one draw call, the CPU-side work is only done once. On the GPU, the vertex job is also smaller since we are not running the non-multiview part of the shader twice. The fragment job, however, remains the same, as we still need to evaluate each pixel of the screen one by one.

Relative CPU Time

As we have seen, multiview is mainly working on the CPU by reducing the number of draw calls you need to issue in order to draw your scene. Let us consider an application where our CPU is lagging behind our GPU, or in other words is CPU bound.

In this application (Fig. 4), the number of cubes changes over time, starting from one and going up to one thousand. Each of them is drawn using a different draw call. We could obviously use batching, but that is not the point here. As expected, the more cubes we add, the longer the frame takes to render. On the graph (Fig. 5), where smaller is better, we have measured the relative CPU time between regular stereo (blue) and multiview (red). If you remember the timeline, this result was expected, as multiview halves our number of draw calls and therefore our CPU time.
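The reasoning can be sketched with a simple linear cost model. This is an illustration only, not the measurement behind Fig. 5: the model, the function names, and the `fixed_cost` and `cost_per_call` parameters (arbitrary units) are all assumptions.

```c
/* Draw calls issued per frame when every object needs its own call. */
static unsigned draw_calls_regular_stereo(unsigned objects)
{
    return 2u * objects; /* one call per object and per eye */
}

static unsigned draw_calls_multiview(unsigned objects)
{
    return objects;      /* one call per object covers both eyes */
}

/* Relative CPU time of multiview against regular stereo under the
 * hypothetical model: frame_cost = fixed_cost + calls * cost_per_call.
 * Returns 1.0 when the regular stereo cost is zero. */
static double relative_cpu_time(unsigned objects,
                                double fixed_cost,
                                double cost_per_call)
{
    const double stereo =
        fixed_cost + draw_calls_regular_stereo(objects) * cost_per_call;
    const double multiview =
        fixed_cost + draw_calls_multiview(objects) * cost_per_call;
    if (stereo <= 0.0) {
        return 1.0;
    }
    return multiview / stereo;
}
```

Under this model the ratio tends towards 0.5 as the number of objects grows, and stays above 0.5 whenever there is a fixed per-frame cost that multiview cannot remove.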

Relative GPU Time

On the GPU we are running vertex and fragment jobs. As we have seen in the timeline (Fig. 3), they are not equally affected by multiview; in fact, only vertex jobs are. On Midgard and Bifrost based Mali GPUs, only the multiview-related parts of the vertex shader are executed for each view.

In our previous example we looked at relative CPU time. This time we have recorded the relative time of the GPU vertex jobs (Fig. 6). Again, smaller is better, with regular stereo in blue and multiview in red.

The savings are immediately visible on this chart as we are no longer computing most of the shader twice.

Wrap it up

From our measurements, multiview is the perfect extension for CPU bound applications, in which you can expect an improvement of between 40% and 50%. Even if your application is not yet CPU bound, multiview should not be overlooked, as it can also somewhat improve your vertex processing time at a very limited cost.

Note that multiview renders to an array of textures inside a framebuffer, so the result is not directly ready for the front buffer. You first need to render the two views side by side. This composition step is mandatory, but in most cases the time it takes is small compared to the rendering time and can be neglected. Moreover, this step can be integrated directly into the lens deformation or timewarp process.
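As an illustration of what the composition step produces, the sketch below copies two layers into one side-by-side image on the CPU. In a real pipeline this is done on the GPU by sampling the texture array; the function name `compose_side_by_side` and the RGBA8 pixel format are assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* layers: two images stored back to back (layer 0 = left eye,
 *         layer 1 = right eye), each width * height RGBA8 pixels,
 *         mirroring a two-layer texture array.
 * out:    (2 * width) * height pixels, left view in the left half. */
static void compose_side_by_side(const uint32_t *layers,
                                 size_t width, size_t height,
                                 uint32_t *out)
{
    const size_t layer_pixels = width * height;
    for (size_t y = 0; y < height; ++y) {
        uint32_t *out_row = out + y * (2 * width);
        /* Left half of the row comes from layer 0. */
        memcpy(out_row, layers + y * width, width * sizeof(uint32_t));
        /* Right half of the row comes from layer 1. */
        memcpy(out_row + width, layers + layer_pixels + y * width,
               width * sizeof(uint32_t));
    }
}
```

Since each output pixel is a single lookup into one layer, the same mapping can be folded into a later full-screen pass instead of being run on its own.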

Multiview Applications

The obvious way, and the one already discussed in this article, is to use multiview in your VR rendering pipeline. Both of your views are then rendered using the same draw calls onto a shared framebuffer. If we try to think outside the box though, it opens up a whole new field in which we can innovate.

Foveated Rendering

Every year our device screens get bigger and our content more complex, while our rendering time budget stays the same. We have already seen what we can save on the CPU side, but sometimes fragment shaders are the real bottleneck. Foveated rendering is based on a physical property of the human eye: only 1% of our eye (the fovea) is mapped to 50% of our visual cortex.

Foveated rendering uses this property to only render high resolution images in the center of your view, allowing us to render a low resolution version on the edges.

For more information on foveated rendering and eye tracking applications, you can have a look at Freddi Jeffries’ blog Eye Heart VR. Stay tuned for a follow-up of this blog on foveated rendering theory.

With foveated rendering (Fig. 7), we then need to render four versions of the same scene: two per eye, one at high resolution and one at low resolution. Multiview makes this possible by sending only one draw call for all four views.
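One way to organise the four views is a small table that maps each view index to an eye and a region. The ordering below and the names `FoveatedView` and `foveated_view` are hypothetical; any fixed mapping works as long as the application and the shader agree on it.

```c
typedef enum { EYE_LEFT = 0, EYE_RIGHT = 1 } Eye;

/* The wide view covers the whole field of view at low resolution,
 * the center view covers only the middle at high resolution. */
typedef enum { REGION_WIDE_LOW_RES = 0, REGION_CENTER_HIGH_RES = 1 } Region;

typedef struct {
    Eye eye;
    Region region;
} FoveatedView;

enum { FOVEATED_VIEW_COUNT = 4 };

/* Hypothetical ordering of the views (the index is what the shader
 * would see as gl_ViewID_OVR):
 *   0: left wide    1: right wide
 *   2: left center  3: right center */
static FoveatedView foveated_view(unsigned view_id)
{
    FoveatedView v;
    v.eye = (view_id & 1u) ? EYE_RIGHT : EYE_LEFT;
    v.region = (view_id & 2u) ? REGION_CENTER_HIGH_RES : REGION_WIDE_LOW_RES;
    return v;
}
```

The application would then fill one matrix per table entry and declare four views in the shader instead of two.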

Stereo Reflections

Reflections are a key factor in achieving true immersion in VR. However, as with everything in VR, they have to be rendered in stereo (Fig. 8). I won’t discuss the details of real time stereo reflections here; please see Roberto Lopez Mendez’s article Combined Reflections: Stereo Reflections in VR for that. In short, this method is based on the use of a secondary camera rendering a mirrored version of the scene. Multiview can help us achieve the stereo reflection at little more than the cost of a regular reflection, thus making real time reflections viable in mobile VR.
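The mirrored camera idea can be sketched as follows: each eye position is reflected across the reflection plane, giving one mirrored camera per eye. This is only the geometric core, under the assumption of a planar reflector; the names `Vec3`, `reflect_point` and `mirrored_eye_positions` are made up for this example and are not taken from the referenced article.

```c
typedef struct { float x, y, z; } Vec3;

/* Reflects point p across the plane dot(normal, p) + d = 0.
 * `normal` must be unit length. */
static Vec3 reflect_point(Vec3 p, Vec3 normal, float d)
{
    /* Signed distance from p to the plane. */
    const float dist = normal.x * p.x + normal.y * p.y + normal.z * p.z + d;
    Vec3 r = {
        p.x - 2.0f * dist * normal.x,
        p.y - 2.0f * dist * normal.y,
        p.z - 2.0f * dist * normal.z
    };
    return r;
}

/* Mirrors both eye positions (index 0 = left, 1 = right), giving the
 * positions of the two reflection cameras. */
static void mirrored_eye_positions(const Vec3 eyes[2], Vec3 normal, float d,
                                   Vec3 mirrored[2])
{
    for (int i = 0; i < 2; ++i) {
        mirrored[i] = reflect_point(eyes[i], normal, d);
    }
}
```

Because the two mirrored cameras see the same scene from nearby positions, they are a natural fit for a two-view multiview pass.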

Conclusions

As we have seen throughout this article, multiview is a game changer for mobile VR, as it allows us to lighten the load of our applications and finally treat the two similar views as one. Each draw call we save is a new opportunity for artists and content creators to add more life to the scenes and improve the overall VR experience.

If you are using your own custom engine and OpenGL ES 3.0 for your project, you can already start working with multiview on some ARM Mali based devices, such as the Samsung Galaxy S6 and S7. Multiview is also drawing increased attention from industry leaders. Oculus, starting from Mobile SDK 1.0.3, now directly supports multiview on Samsung Gear VR, and if you are using a commercial engine such as Unreal, plans are in progress to support multiview inside the rendering pipeline.